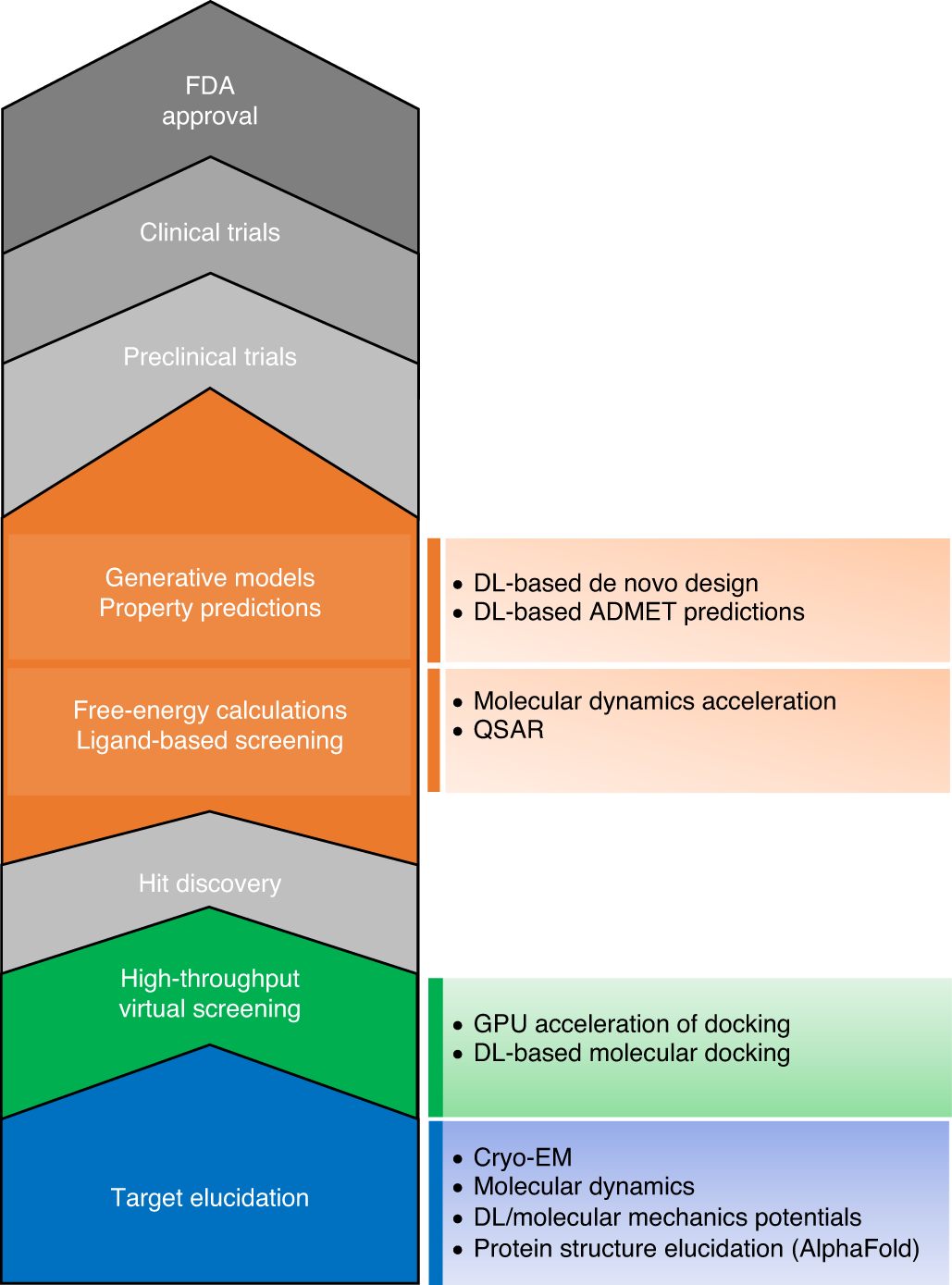

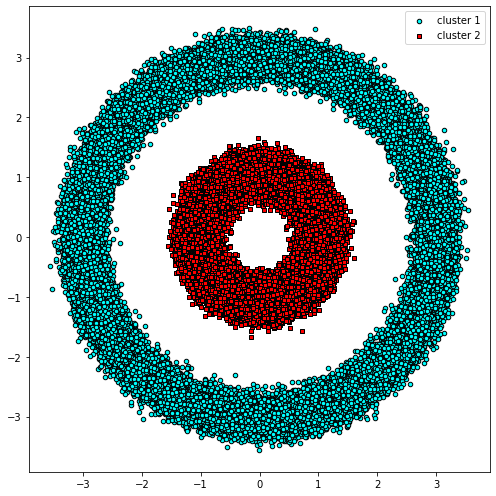

The standard Python ecosystem for machine learning, data science, and...

in FAQ, link deep learning question to GPU question · Issue #8218 · scikit-learn/scikit-learn · GitHub

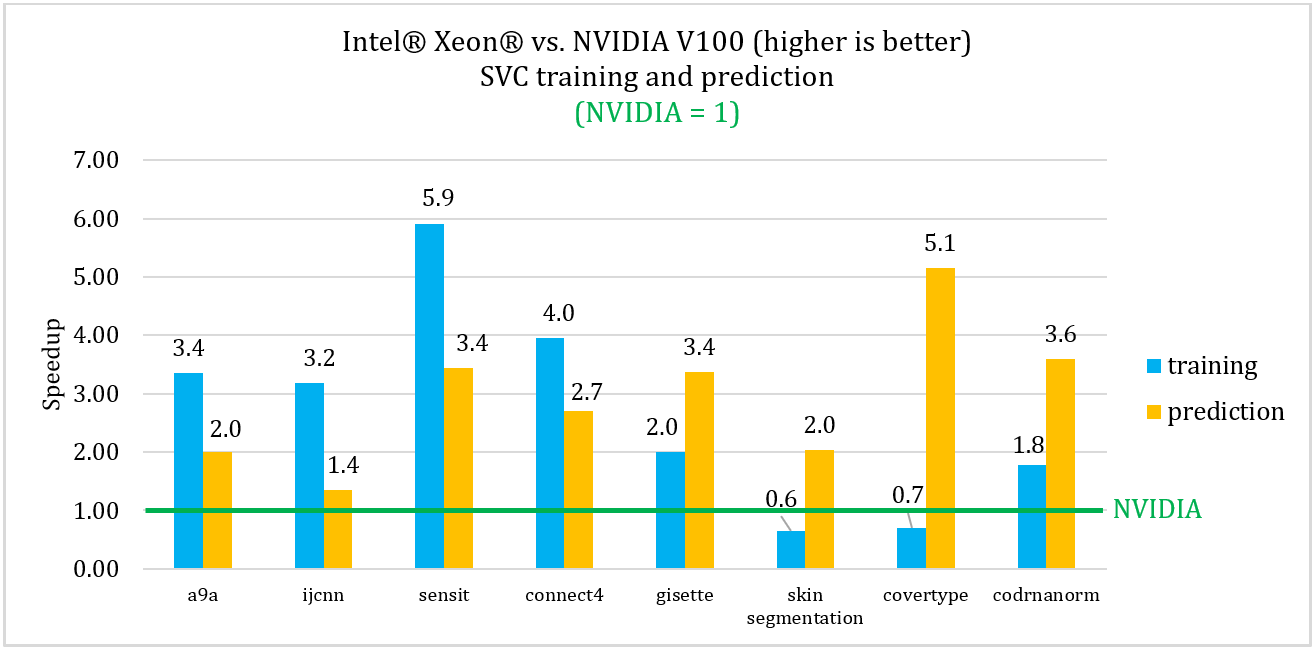

Tensors are all you need. Speed up Inference of your scikit-learn… | by Parul Pandey | Towards Data Science

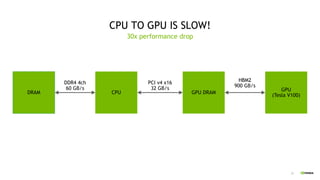

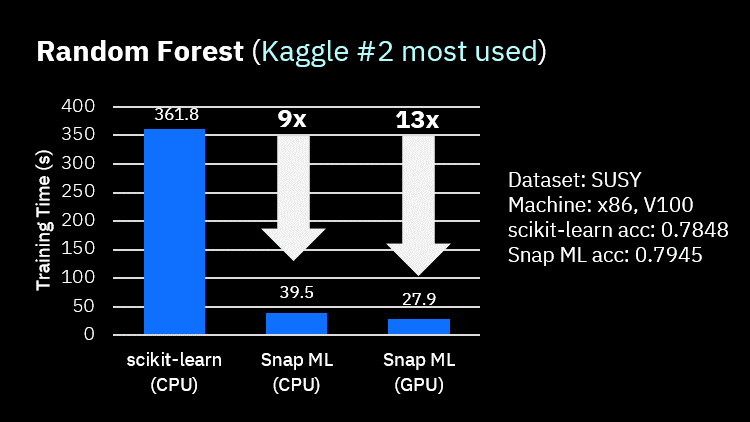

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science